The World Development Report 2016, the main annual publication of the World Bank, is out. This year's theme is Digital Dividends, and the Report examines the role of digital technologies in promoting development outcomes. Its findings are both encouraging and sobering. Those skeptical of the role of digital technologies in development might be surprised by some of the results presented in the report. Technology advocates from across the spectrum (civic tech, open data, ICT4D) will inevitably come across some facts that should temper their enthusiasm.

While some may disagree with the findings, this Report is an impressive piece of work, spread across six chapters covering different aspects of digital technologies in development: 1) accelerating growth, 2) expanding opportunities, 3) delivering services, 4) sectoral policies, 5) national priorities, 6) global cooperation. I may be biased, as someone who made some modest contributions to the Report, but I believe that this is, to date, the most thorough effort to examine the effects of digital technologies on development outcomes. The full report can be downloaded here.

Among other sources, the Report draws on 14 background papers prepared by international experts and World Bank staff. These background papers serve as additional reading for those who would like to examine certain issues more closely, such as social media, net neutrality, and the cybersecurity agenda.

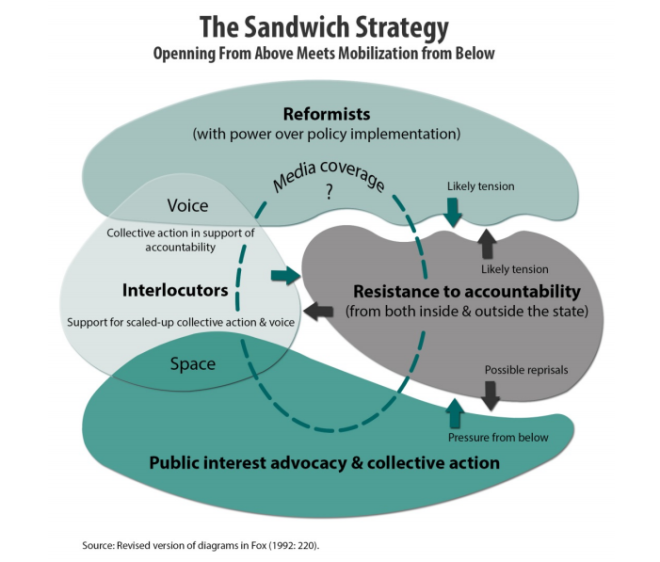

For those interested in citizen participation and civic tech, one of the papers, written by Prof. Jonathan Fox and me, might be of particular interest: When Does ICT-Enabled Citizen Voice Lead to Government Responsiveness? Below is the abstract:

This paper reviews evidence on the use of 23 information and communication technology (ICT) platforms to project citizen voice to improve public service delivery. This meta-analysis focuses on empirical studies of initiatives in the global South, highlighting both citizen uptake (‘yelp’) and the degree to which public service providers respond to expressions of citizen voice (‘teeth’). The conceptual framework further distinguishes between two trajectories for ICT-enabled citizen voice: Upwards accountability occurs when users provide feedback directly to decision-makers in real time, allowing policy-makers and program managers to identify and address service delivery problems – but at their discretion. Downwards accountability, in contrast, occurs either through real time user feedback or less immediate forms of collective civic action that publicly call on service providers to become more accountable and depends less exclusively on decision-makers’ discretion about whether or not to act on the information provided. This distinction between the ways in which ICT platforms mediate the relationship between citizens and service providers allows for a precise analytical focus on how different dimensions of such platforms contribute to public sector responsiveness. These cases suggest that while ICT platforms have been relevant in increasing policymakers’ and senior managers’ capacity to respond, most of them have yet to influence their willingness to do so.

You can download the paper here.

Any feedback on our paper or on the models it proposes (such as the one below) would be very welcome.

Unpacking user feedback and civic action: difference and overlap

Below, I also list all the background papers, with their titles and authors:

- When Does ICT-Enabled Citizen Voice Lead to Government Responsiveness? by Tiago Peixoto and Jonathan Fox

- Development Impact of Social Media by Robert Ackland and Kyosuke Tanaka

- The New Cybersecurity Agenda: Economic and Social Challenges to a Secure Internet by Johannes M. Bauer and William H. Dutton

- Multistakeholder Internet Governance? by William H. Dutton

- The Economics and Policy Implications of Infrastructure Sharing and Mutualisation in Africa by Jose Marino Garcia and Tim Kelly

- Guatemala, an Early Spectrum Management Reformer by Jose Marino Garcia

- Network Neutrality and Private Sector Investment by Jose Marino Garcia

- Best Practices and Lessons Learned in ICT Sector Innovation: A Case Study of Israel by Dr. Daphne Getz and Dr. Itzhak Goldberg (Researchers: Eliezer Shein, Bahina Eidelman, Ella Barzani)

- How Tech Hubs are helping to Drive Economic Growth in Africa by Tim Kelly and Rachel Firestone

- One Network Area in East Africa by Tim Kelly and Christopher Kemei

- One Step Forward, Two Steps Backward? Does E-Government make Governments in Developing Countries more Transparent and Accountable? by Victoria L. Lemieux

- Exploring the Relationship between Broadband and Economic Growth by Michael Minges

- Enabling Digital Entrepreneurs by Desirée van Welsum

- Sharing is caring? Not quite. Some observations about 'the sharing economy' by Desirée van Welsum

Happy reading!