Monthly Archives: April 2016

Comparing Texts with Log-Likelihood Word Frequencies

One way to compare the similarity of documents is to examine the comparative log-likelihood of word frequencies.

This can be done with any two documents, but it is a particularly interesting way to compare the similarity of a smaller document with the larger body of text it is drawn from. For example, with access to the appropriate data, you may want to know how similar Shakespeare was to his contemporaries. The Bard is commonly credited with coining a large number of words, but it’s unclear exactly how true this is – after all, the work of many of his contemporaries has been lost.

But imagine you ran across a treasure trove of miscellaneous documents from 1600 and you wanted to compare them to Shakespeare’s plays. You could do this by calculating the expected frequency of a given word and comparing it to the observed frequency. First, you can calculate the expected frequency as:

$$E_i = \frac{N_i \sum_j O_j}{\sum_j N_j}$$

Here, $N_i$ is the total number of words in document $i$ and $O_i$ is the observed frequency of a given word in document $i$. That is, the expected frequency of a word is (number of words in your subcorpus) × (sum of its observed frequencies in both corpora) / (number of words in both corpora).
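As a quick check of the arithmetic, here is a minimal Python sketch of the expected-frequency step. The function name `expected_frequencies` and the example counts are hypothetical, chosen only for illustration.

```python
def expected_frequencies(observed, totals):
    """Expected count E_i of one word in each corpus i.

    observed -- observed counts O_i of the word, one per corpus
    totals   -- total word counts N_i, one per corpus
    """
    pooled_count = sum(observed)  # the word's count across all corpora
    grand_total = sum(totals)     # number of words in all corpora
    return [n * pooled_count / grand_total for n in totals]


# Hypothetical counts: the word occurs 30 times in a 10,000-word subcorpus
# and 70 times in a 90,000-word reference corpus.
print(expected_frequencies([30, 70], [10_000, 90_000]))  # [10.0, 90.0]
```

In this made-up case the word appears three times as often as expected in the subcorpus (30 observed vs. 10 expected), which is exactly the kind of gap the log-likelihood score is meant to quantify.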

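Then, you can use these expectations to determine a word’s log-likelihood given the larger corpus as:

$$LL = 2 \sum_i O_i \ln\left(\frac{O_i}{E_i}\right)$$

where a term with $O_i = 0$ contributes zero.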

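Sorting words by their log-likelihood, you can then see the most unlikely (i.e., the most distinctive) words in your smaller corpus. A high score only means that the observed count departs strongly from expectation; comparing $O_i$ with $E_i$ for the smaller corpus tells you whether the word is over- or under-used there.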
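Putting both formulas together, a ranking might be sketched in Python as follows. This assumes the texts are already tokenized into lists of lowercase words and treats the two lists as separate corpora; the function names are hypothetical.

```python
import math
from collections import Counter


def log_likelihood(o1, o2, n1, n2):
    """LL = 2 * sum_i O_i * ln(O_i / E_i) for one word in two corpora."""
    pooled = o1 + o2
    e1 = n1 * pooled / (n1 + n2)
    e2 = n2 * pooled / (n1 + n2)
    total = 0.0
    for observed, expected in ((o1, e1), (o2, e2)):
        if observed > 0:  # O * ln(O / E) tends to 0 as O -> 0
            total += observed * math.log(observed / expected)
    return 2 * total


def rank_distinctive_words(small_tokens, large_tokens):
    """Words of the smaller corpus sorted by log-likelihood, highest first."""
    small, large = Counter(small_tokens), Counter(large_tokens)
    n1, n2 = len(small_tokens), len(large_tokens)
    scores = {word: log_likelihood(small[word], large[word], n1, n2) for word in small}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


ranking = rank_distinctive_words("a a b".split(), "b b b c".split())
print([word for word, _ in ranking])  # ['a', 'b']
```

Skipping zero counts avoids evaluating `log(0)`. A `Counter` returns 0 for missing words, so a word that never appears in the larger corpus is still scored correctly.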

Catching Up on DemocracySpot

It’s been a while, so here’s a miscellaneous post with things I would normally share on DemocracySpot.

Yesterday the beta version of the Open Government Research Exchange (OGRX) was launched. Intended as a hub for research on innovations in governance, the OGRX is a joint initiative by NYU’s GovLab, MySociety and the World Bank’s Digital Engagement Evaluation Team (DEET) (which, full disclosure, I lead). As the “beta” suggests, this is an evolving project, and we look forward to receiving feedback from those who either work with or benefit from research in open government and related fields. You can read more about it here.

Today we also launched the Open Government Research mapping. Same story, just “alpha” version. There is a report and a mapping tool that situates different types of research across the opengov landscape. Feedback on how we can improve the mapping tool – or tips on research that we should include – is extremely welcome. More background about this effort, which brings together Global Integrity, Results for Development, GovLab and the World Bank, can be found here.

Also, for those who have not seen it yet, DEET published the Evaluation Guide for Digital Citizen Engagement a couple of months ago. Commissioned and overseen by DEET, the guide was developed and written by Matt Haikin (lead author), Savita Bailur, Evangelia Berdou, Jonathan Dudding, Cláudia Abreu Lopes, and Martin Belcher.

And here is a quick roundup of things I would have liked to have written about since my last post had I been a more disciplined blogger:

- A field experiment in rural Kenya finds that “elite control over planning institutions can adapt to increased mobilization and participation.” I tend to disagree a little with the author’s conclusion, which emphasizes the role of “power dynamics that allow elites to capture such institutions” in explaining his findings (some of the issues seem to be a matter of institutional design). In any case, it is a great study and I strongly recommend reading it.

- A study examining a community-driven development program in Afghanistan finds a positive effect on access to drinking water and electricity, acceptance of democratic processes, perceptions of economic wellbeing, and attitudes toward women. However, effects on perceptions of government performance were limited or short-lived.

- A great paper by Paolo de Renzio and Joachim Wehner reviews the literature on “The Impacts of Fiscal Openness”. It is a must-read for transparency researchers, practitioners and advocates. I just wish the authors had included some research on the effects of citizen participation on tax morale.

- Also related to tax, “Consumers as Tax Auditors” is a fascinating paper on how citizens can take part in efforts to reduce tax evasion while participating in a lottery.

- Here is a great book about e-Voting and other technology developments in Estonia. Everybody working in the field of technology and governance knows Estonia does an amazing job, but information about it is often scattered and, sometimes, of low quality. This book, co-authored by my former colleague Kristjan Vassil, addresses this gap and is a must-read for anybody working with technology in the public sector.

- Finally, I got my hands on the pictures of the budget infograffitis (or data murals) in Cameroon, an idea that emerged a few years ago when I was involved in a project supporting participatory budgeting in Yaoundé (which also produced Open Spending Cameroon). I do hope that this idea of bringing data visualizations to the offline world catches on. After all, that is valuable data in a citizen-readable format.

picture by ASSOAL

I guess that’s it for now.

the most educated Americans are liberal but not egalitarian

Pew reports: “Highly educated adults – particularly those who have attended graduate school – are far more likely than those with less education to take predominantly liberal positions across a range of political values. And these differences have increased over the past two decades.” Indeed, “more than half of those with postgraduate experience (54%) have either consistently liberal political values (31%) or mostly liberal values (23%).”

Based on that finding, one might assume that the most educated Americans stand the furthest left on our political spectrum. And, based on that premise, one might conclude that …

- The people who would benefit most from left-of-center policies don’t support those policies, and the people who do support those policies don’t benefit from them–which is a paradox. OR …

- Liberal programs are special-interest subsidies for people with advanced educations (like lawyers, physicians, and teachers), and that is why they vote for them.

- Progressives tend to be smug or condescending because we tend to be highly educated and convinced that we support policies that are better for other people–and this is an unattractive attitude that loses votes.

- Colleges and graduate schools are moving people left (either because they have ideological agendas or because “reality has a liberal bias”).

I’d actually propose a different view from any of the above. Pew does not find that highly educated people are the furthest left. Rather, people with the most schooling consistently give answers that are labeled liberal on a set of 10 items that range over economic, foreign, and social policies. None of the survey questions offers a radical opinion as an option. So Pew is measuring consistency, not radicalism.

Ideological consistency is correlated with education, but not necessarily for a good reason. More book-learning makes you more aware of the partisan implications of adopting a stance on any particular issue. So, for instance, conservatives are more likely to disbelieve in global warming if they have more education–because their education helps them (as it helps everyone) to see the ideological valence of this issue.

Many of the most educated Americans endorse a certain basket of political ideas that are associated with the mainstream Democratic Party. They have learned to recognize these policies as the best ones, and the policies are designed to appeal to them. All of the positions are labeled “liberal,” so the most educated are deemed liberals. Yet the most educated are not the most committed to equality. Instead, they are quite comfortable with their advantages, even as they endorse positions that Pew calls liberal.

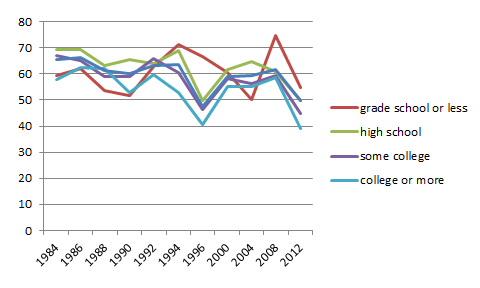

To test that hypothesis, I wanted to look at a survey question about equality that has been asked over a long time period with large samples. The best I found was this American National Election Study question: Do you agree that society should make sure everyone has equal opportunity? This is not an ideal measure, because support for equal opportunity is not the most egalitarian possible position. If you are very committed to equality, you may prefer equal outcomes. Still, the question provides useful comparative data.

In 2012, the more education you had, the less likely you were to favor equality of opportunity. The whole population was less supportive than they’d been in 2008 and less supportive than at any time in the 1980s. But the least educated were the least supportive of equality during the Reagan years, and now they are the most concerned about it.

As of 2012, the most educated Americans are the least egalitarian, even though they are consistently “liberal.” Less than half of them strongly favored equality of opportunity.

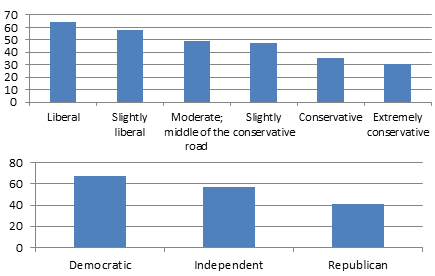

It’s true that Democrats and liberals are (as of 2012) more likely to support equal opportunity than Republicans and conservatives are–and that the highly educated are the most liberal. However, the correlations between egalitarianism and partisanship or ideology are not tight. Forty percent of Republicans strongly agree that society should make sure everyone has equal opportunity, as do 30 percent of extreme conservatives. This is partly because conservatives also have an equal opportunity agenda, and partly because the liberal-to-conservative scale is defined by a whole basket of issues. It’s quite possible to be a strong liberal and yet not believe strongly in equality. And I think that is a common view among the most educated Americans–who are also the most advantaged.

Register for 5/17 Tech Tuesday with Urban Interactive Studio

Registration is now open for May’s Tech Tuesday event with Urban Interactive Studio. Join us for this FREE event Tuesday, May 17th from 1:00-2:00pm Eastern/10:00-11:00am Pacific.

Urban Interactive Studio helps agencies and consultants engage communities online through project websites and participation apps that can be tailored to any approach, process or project objective. As web and mobile technology has become commonplace, citizens now expect online participation options, but public engagement practitioners are often forced to cobble together inflexible off-the-shelf tools that only partially meet their engagement needs. UIS’s EngagingPlans and EngagingApps deliver a comprehensive, feature-rich, and adaptable solution that helps meet varied objectives at every project stage, from early visioning to final draft review and interactive online plans.

EngagingPlans and EngagingApps inform and involve broad audiences while also generating actionable insights for decision makers. EngagingPlans bundles the most widely used features into one project hub that reaches, informs, and involves citizens and stakeholders in civic projects and decision making. EngagingPlans websites form the backbone of digital project communications, keeping documents, events, news, and FAQs accessible and collecting community feedback through surveys, discussions, idea walls, and draft reviews.

As projects progress beyond open-ended visioning, the choices for online engagement software decrease drastically. EngagingApps are semi-customizable mapping tools, workbooks, interactive plans, and simulators that encourage informed, specific feedback about topics like commuting habits, proposed land use designations, design concepts, growth scenarios, and funding allocation.

On this call, NCDD Member Chris Haller, Founder & CEO, and Emily Crespin, Partnership Manager, of Urban Interactive Studio will walk through examples of both EngagingPlans and EngagingApps in action, with particular emphasis on how EngagingApps can be configured to address unique participation objectives during each stage of any public process. Participants are encouraged to review the UIS website prior to Tech Tuesday and be prepared for a high-level overview of EngagingPlans and an in-depth discussion about EngagingApps and strategies for implementation.

Don’t miss out on this opportunity to see these tools in action – register today!

Tech Tuesdays are a series of learning events from NCDD focused on technology for engagement. These 1-hour events are designed to help dialogue and deliberation practitioners get a better sense of the online engagement landscape and how they can take advantage of the myriad opportunities available to them. You do not have to be a member of NCDD to participate in our Tech Tuesday learning events.

Engaging Ideas – 4/29

Workshop: Public Engagement Strategy Lab

Looking for assistance with organizing and sustaining productive public engagement? Struggling to decide how to combine online and face-to-face engagement? Frustrated with the standard "2 minutes at the microphone" public meeting? Want to know about the latest tools and techniques? Need expert advice on bringing together a diverse critical mass of people? In concert with the Tisch College for Civic Life at Tufts University, Public Agenda is offering a one-day workshop designed to help you tackle these key democratic challenges.

Join the Public Engagement Strategy Lab!

Who: Leaders looking to revamp or strengthen their engagement strategies, structures and tools

Date: Thursday, June 23, 2016

Time: 9:30am-4:30pm

Location: Tufts University Medical School, 145 Harrison Avenue, Boston, Massachusetts 02111

Cost:

- Early Bird $275 (by May 15, 2016)

- Regular $350 (after May 15, 2016)

- Student $75

- Frontiers of Democracy Attendee $160 (must be registered with Frontiers of Democracy conference)

Registration Deadline: Required by June 16, 2016, pending availability

Contact: PE@publicagenda.org or call Mattie at 212-686-6610 ext. 137

Space is limited. Register today!

During the workshop, Public Agenda trainers Matt Leighninger and Nicole Hewitt will:

- provide an overview of the strengths and limitations of public engagement today;

- help you assess the strengths and weaknesses of public engagement in your community;

- explore potential benefits of more sustained forms of participation;

- develop practical skills for planning for stronger engagement infrastructure; and

- demonstrate a mix of small group and large group discussions, interactive exercises, case studies and practical exercises.

This Strategy Lab is hosted by Tisch College, Tufts University as a preconference session for Frontiers of Democracy 2016. Participants in the Public Engagement Strategy Lab have the option of staying for the Frontiers of Democracy Conference. To register for the conference, click here.

The Public Engagement Strategy Lab will provide you with the tools and resources you need to authentically engage stakeholders in thoughtful, democratic processes. No more public forums and community meetings that lack impact. Move your public engagement planning forward with approaches based on the ideas and examples found in Public Participation for 21st Century Democracy (Wiley-Blackwell, 2015).

To register, follow this link. We hope to see you there!

Linguistic Clues and Evidence Relationships

Earlier in the week, I wrote about the theory of coherent argument structure introduced by Robin Cohen in 1987. Her model also included two other elements: a theory of linguistic clue interpretation and a theory of evidence relationships. These theories, the focus of today’s post, are both closely connected to each other as well as to the theory of argument structure.

Theory of Linguistic Clue Interpretation

Cohen’s theory of linguistic clue interpretation argues for the existence of clue words: “those words and phrases used by the speaker to directly indicate the structure of the argument to the hearer.” These linguistic cues, or discourse markers, can be identified through simple n-gram models as well as more sophisticated means, and they are a common feature of argument mining. Cohen outlines several common clue types, such as redirection, which redirects the hearer to an earlier part of the argument (“returning to…”), and connection, a broad category encompassing clues of inference (“as a result of…”), clues of contrast (“on the other hand…”), and clues of detail (“specifically…”). Most notably, though, Cohen argues that clues are necessary for arguments whose structure is more complex than those covered by the coherent structure theory. That is, the function of linguistic cues is not “to merely add detail on the interpretation of the contained proposition, but to allow that proposition an interpretation that would otherwise be denied” (Cohen 1987).
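To make the idea of n-gram clue detection concrete, here is a minimal Python sketch of a lexicon lookup. The lexicon is an assumption built only from the example phrases quoted above; a real inventory of clue words would be far larger, and the function name `find_clues` is hypothetical.

```python
import re

# Illustrative lexicon built only from the example phrases in this post.
# The three "connection:" entries are subtypes of Cohen's connection clues.
CLUE_LEXICON = {
    "redirection": ["returning to"],
    "connection:inference": ["as a result of"],
    "connection:contrast": ["on the other hand"],
    "connection:detail": ["specifically"],
}


def find_clues(sentence):
    """Return (clue type, phrase) pairs whose phrase occurs in the sentence."""
    text = sentence.lower()
    hits = []
    for clue_type, phrases in CLUE_LEXICON.items():
        for phrase in phrases:
            # \b anchors keep a phrase from matching inside a longer word
            if re.search(r"\b" + re.escape(phrase) + r"\b", text):
                hits.append((clue_type, phrase))
    return hits


print(find_clues("On the other hand, returning to the budget, costs rose."))
# [('redirection', 'returning to'), ('connection:contrast', 'on the other hand')]
```

A lookup like this only finds clues that are present and used as intended, which is the limitation Stab and Gurevych raise about student essays.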

Discourse markers are also “strongly associated with particular pragmatic functions,” (Abbott, Walker et al. 2011) making them valuable for sentiment analysis tasks such as determining agreement or disagreement. This is the approach Abbott, Walker et al. used in classifying types of arguments within a corpus of 109,553 annotated posts from an online forum. Since the forum allowed for explicitly quoting another post, Abbott, Walker et al. identified 8,242 quote-response pairs, where a user quoted another post and then added a comment of their own.

In addition to the classification task of determining whether the response agrees or disagrees with the preceding quote, the team analysed the pairs along a number of sentiment spectrums: respect/insult, fact/emotion, nice/nasty, and sarcasm. Twenty discourse markers, identified through manual inspection of a subset of the corpus, as well as the use of “repeated punctuation,” served as key features in the analysis.
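A minimal Python sketch of this style of feature extraction for one quote-response pair might look like the following. The marker set is a hypothetical placeholder, not the twenty markers Abbott, Walker et al. selected. "Repeated punctuation" is assumed here to mean two or more consecutive `!` or `?` marks. The resulting dictionaries would then be passed to a rule learner such as Weka's JRip, which is not shown.

```python
import re

# Hypothetical placeholder markers; NOT the twenty markers used by
# Abbott, Walker et al.
MARKERS = {"oh", "really", "so", "but", "well"}

# Assumption: "repeated punctuation" = two or more consecutive ! or ? marks.
REPEATED_PUNCT = re.compile(r"[!?]{2,}")


def text_features(text):
    """Count marker tokens and runs of repeated punctuation in one text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return {
        "marker_count": sum(token in MARKERS for token in tokens),
        "repeated_punct": len(REPEATED_PUNCT.findall(text)),
    }


def pair_features(quote, response):
    """Features from both the quote and the response, prefixed by source."""
    features = {}
    for prefix, text in (("quote", quote), ("response", response)):
        for name, value in text_features(text).items():
            features[f"{prefix}_{name}"] = value
    return features


print(pair_features("Taxes fund schools.", "Oh really?!? So you think that works??"))
```

Prefixing the features by source keeps the quote's features separate from the response's, which matters because the best result reported above used local features from both.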

Using a JRip classifier built on n-gram and bi-gram discourse markers, as well as a handful of other features such as post meta-data and topic annotations, Abbott, Walker et al. found the best performance (0.682 accuracy, compared to a 0.626 unigram baseline) using local features from both the quote and response. This indicates that the contextual features do matter, and, in the words of the authors, vindicates their “interest in discourse markers as cues to argument structure” (Abbott, Walker et al. 2011).

While these discourse markers can provide vital clues to a hearer trying to reconstruct an argument, relying on them in a model requires that a speaker not only try to be understood, but be capable of expressing themselves clearly. Stab and Gurevych, who are interested in argument mining as a tool for automating feedback on student essays, argue that discourse markers make a poor feature, since, in their corpora, these markers are often missing or even misleadingly used (Stab and Gurevych 2014). Their approach to this challenge will be further discussed in the state of the art section of this paper.

Theory of Evidence Relationships

The final piece of Cohen’s model is evidence relationships, which explicitly connect one argument to another and govern the verification of evidence relations between propositions (Cohen 1987). While the coherent structure principle lays out the different forms an argument may take, evidence relationships are the logical underpinnings that tie an argument’s structure together. As Cohen explains, the pragmatic analysis of evidence relationships is necessary for the model because the hearer needs to be able to “recognize beliefs of the speaker, not currently held by the hearer.” That is, whether or not the hearer agrees with the speaker’s argument, the hearer needs to be able to identify the elements of the speaker’s argument as well as the logic which holds that argument together.

To better understand the role of evidence relationships, it is helpful to first develop a definition of an “argument.” In its most general form, an argument can be understood as a representation of a fact as conveying some other fact. In this way, a complete argument requires three elements: a conveying fact, a warrant providing an appropriate relation of conveyance, and a conveyed fact (Katzav and Reed 2008). However, one or more of these elements often takes the form of an implicit enthymeme and is left unstated by the speaker. For this reason, some studies model arguments in their simplest form as a single proposition, though humans generally require at least two of the elements to accurately distinguish arguments from statements (Mochales and Moens 2011).
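The three-element definition maps naturally onto a small data structure. In the Python sketch below, an unstated element (an enthymeme) is marked with `None`. The class name is hypothetical. The `looks_like_argument` check is only a rough heuristic reading of the observation that humans generally need at least two stated elements.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class Argument:
    """Katzav and Reed's three elements; None marks an unstated element."""
    conveying_fact: Optional[str]
    warrant: Optional[str]
    conveyed_fact: Optional[str]

    def enthymemes(self) -> List[str]:
        """Names of the elements the speaker left implicit."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def looks_like_argument(self) -> bool:
        """Rough heuristic: at least two of the three elements are stated."""
        return len(self.enthymemes()) <= 1


arg = Argument("The streets are wet", None, "It rained last night")
print(arg.enthymemes())           # ['warrant']
print(arg.looks_like_argument())  # True
```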

The ubiquity of enthymemes in both formal and informal dialogue has proved to be a significant challenge for argument mining. Left for the hearer to infer, these implicit elements are often “highly context-dependent and cannot be easily labeled in distant data using predefined patterns” (Habernal and Gurevych 2015). It is important to note that the existence of these enthymemes does not violate the initial assumption that a speaker argues with the intent of being understood by a hearer. Rather, enthymemes, like other human heuristics, provide computational savings to a speaker/hearer pair with a sufficiently shared context. Thus, enthymemes indicate the elements of an argument that a speaker assumes a listener can easily infer, which poses a particular challenge when a speaker is a poor judge of the listener’s knowledge or when the listener is an AI model.

To complicate matters further, there are no definitive rules for the roles enthymemes may take. Any of an argument’s elements may appear as enthymemes, though psycholinguistic evidence indicates that the relationship of conveyance between two facts, the argument’s warrant, is most commonly left implicit (Katzav and Reed 2008). Similarly, the discourse markers which might otherwise serve as valuable clues for argument reconstruction “need not appear in arguments and thus cannot be relied upon” (Katzav and Reed 2008). All of this poses a significant challenge.

In her work, Cohen bypasses this problem by relying on an evidence oracle which takes two propositions, A and B, and responds ‘yes’ or ‘no’ as to whether A is evidence for B (Cohen 1987). In determining argument relations, Cohen’s oracle identifies missing premises, verifies the plausibility of these enthymemes, and ultimately concludes that an evidence relation holds if the missing premise is deemed plausible. In order to be found plausible, the inferred premise must be both plausibly intended by the speaker and plausibly believed by the hearer. In this way, the evidence oracle determines the structure of the argument while also overcoming the presence of enthymemes.
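Cohen's oracle is a black box, so any code can only be a toy stand-in. The Python sketch below assumes propositions are plain strings and that the only possible missing premise is the single rule "a supports b". A real oracle would need to search for and construct such premises. All names here are hypothetical.

```python
from typing import FrozenSet, Tuple

Rule = Tuple[str, str]  # (premise, conclusion): "premise is evidence for conclusion"


def evidence_oracle(a: str, b: str,
                    stated: FrozenSet[Rule],
                    speaker_plausible: FrozenSet[Rule],
                    hearer_plausible: FrozenSet[Rule]) -> str:
    """Toy stand-in for Cohen's oracle: answer 'is a evidence for b?'."""
    link = (a, b)
    if link in stated:
        return "yes"  # the connecting premise was stated outright
    # Otherwise the connecting premise is missing (an enthymeme): accept it
    # only if the speaker plausibly intends it AND the hearer plausibly believes it.
    if link in speaker_plausible and link in hearer_plausible:
        return "yes"
    return "no"


rule = ("the streets are wet", "it rained")
print(evidence_oracle(rule[0], rule[1], frozenset(), frozenset({rule}), frozenset({rule})))  # yes
print(evidence_oracle(rule[0], rule[1], frozenset(), frozenset({rule}), frozenset()))        # no
```

The second call fails because the hearer does not plausibly believe the missing premise. This mirrors the two-sided plausibility test described above.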

To reduce reliance on such an oracle, Katzav and Reed develop a model to automatically determine the evidence relations between any pair of propositions within a text. Their model allows for two possible relations between an observed pair of argumentative statements: a) one of the propositions represents a fact which supposedly necessitates the other proposition (i.e., the conveyance is missing), or b) one proposition represents a conveyance which, together with a fact represented by the other proposition, supposedly necessitates some missing proposition (i.e., the claim is missing) (Katzav and Reed 2008). The task then is to determine the type of relationship between the two statements and use that relationship to reconstruct the missing element.

Their notable contribution to argumentation theory is to observe that arguments can be classified by type (e.g., “causal argument”), and that this type constrains the possible evidence relations of an argument. Central to their model is the identification of an argument’s warrant: the conveying element which defines the relationship between fact A and fact B. Since this is the element which is most often an enthymeme, Katzav and Reed devote significant attention to reconstructing an argument’s warrant from two observed argumentative statements. If, on the other hand, the observed pair falls into type b) above, with the final proposition missing, then the process is trivial: “the explicit statement of the conveying fact, along with the warrant, allows the immediate deduction of the implicit conveyed fact” (Katzav and Reed 2008).

This framework cleverly redefines the enthymeme reconstruction challenge. Katzav and Reed argue that no relation of conveyance can reasonably be thought to relate just any type of fact to any other type of fact. Therefore, given two observed propositions, A and B, a system can narrow the class of possible relations to warrants which can reasonably be thought to relate facts of the type A to facts of the type B. Katzav and Reed find this to be a “substantial constraint” which allows a system to deduce a missing warrant by leveraging a theory of “which relations of conveyance there are and of which types each such relation can reasonably be thought to relate” (Katzav and Reed 2008).
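The type constraint can be pictured as a lookup from a pair of fact types to the warrants that could relate them. In the Python sketch below, the warrant inventory and the fact-type labels are invented for illustration. Katzav and Reed's paper does not supply such a table, so treat every entry as an assumption.

```python
# Hypothetical inventory of relations of conveyance: each warrant is listed
# with the type of fact it can relate FROM and the type it can relate TO.
WARRANTS = {
    "cause_to_effect": ("event", "event"),
    "sign": ("observation", "state"),
    "expert_opinion": ("testimony", "state"),
    "classification": ("property", "category"),
}


def candidate_warrants(type_a, type_b):
    """Warrants that can reasonably relate facts of type_a to facts of type_b."""
    return sorted(
        name for name, (source, target) in WARRANTS.items()
        if source == type_a and target == type_b
    )


print(candidate_warrants("observation", "state"))  # ['sign']
print(candidate_warrants("event", "category"))     # []
```

The lookup itself is trivial. The hard part, as the following paragraph notes, is obtaining statements and background knowledge annotated with fact types in the first place.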

While this approach does represent an advancement over Cohen’s entirely oracle-dependent model, it is not without its own limitations. For successful warrant recovery, Katzav and Reed require a corpus with statements annotated with the types of facts they represent and a system with relevant background information similarly marked up. Furthermore, it requires a robust theory of warrants and relations, a subject only loosely outlined in their 2008 paper. Reed has advanced such a theory elsewhere, however, through his collaborations with Walton. This line of work is picked up by Feng and Hirst in a slightly different approach to enthymeme reconstruction.

Before inferring an argument’s enthymemes, Feng and Hirst argue, one must first classify an argument’s scheme. While a warrant defines the relation between two propositions, a scheme is a template which may incorporate more than two propositions. Unlike Cohen’s argument structures, the order in which statements occur does not affect an argument’s scheme. A scheme, then, is a flexible model which incorporates elements of Cohen’s coherent structure theory with elements of her evidence relations theory.

Drawing on the 65 argument schemes developed by Walton et al. in 2008, Feng and Hirst seek to classify arguments under the five most common schemes. While their ultimate goal is to infer enthymemes, their current work treats this challenge primarily as a classification task: once an argument’s scheme is properly classified, reconstruction becomes a simpler task. Under their model, an argument mining pipeline would classify an argument’s scheme, fit the stated propositions into the scheme, and then use this template to infer enthymemes (Feng and Hirst 2011).
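The three pipeline steps can be sketched in Python as below. The slot templates are simplified, hypothetical stand-ins loosely modelled on two of the schemes named in this post; Walton et al.'s actual schemes are richer. The keyword "classifier" is only a placeholder for a trained model. The fitting step is assumed to be done by the caller.

```python
# Simplified, hypothetical slot templates; not Walton et al.'s actual schemes.
SCHEMES = {
    "practical_reasoning": ["goal", "means", "conclusion"],
    "argument_from_example": ["example", "generalization"],
}


def classify_scheme(text):
    """Placeholder for a trained scheme classifier: a crude keyword rule."""
    lowered = text.lower()
    if "should" in lowered or "in order to" in lowered:
        return "practical_reasoning"
    return "argument_from_example"


def missing_slots(scheme, filled):
    """Slots with no stated proposition: the enthymemes left to infer."""
    return [slot for slot in SCHEMES[scheme] if slot not in filled]


text = "We should raise the levy in order to fund the schools."
scheme = classify_scheme(text)                # step 1: classify the scheme
filled = {"goal": "fund the schools",         # step 2: fit stated propositions
          "conclusion": "we should raise the levy"}
# Step 3 would infer a proposition for each missing slot.
print(scheme, missing_slots(scheme, filled))  # practical_reasoning ['means']
```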

Working with 393 arguments from the Araucaria dataset, Feng and Hirst achieved best average accuracies above 90% for two of their schemes, with the other three schemes scoring in the 60s and 70s. They did this using a range of sentence- and token-based features, as well as a “type” feature, annotated in their dataset, which indicates whether the premises contribute to the conclusion in linked or convergent order (Feng and Hirst 2011).

A “linked” argument has two or more interdependent propositions which are all necessary to make the conclusion valid. In contrast, exactly one premise is sufficient to establish a valid conclusion in a “convergent” argument (Feng and Hirst 2011). They found this type feature to improve classification accuracy in most cases, though that improvement varied from 2.6 points for one scheme to 22.3 points for another. Unfortunately, automatically identifying an argument’s type is not an easy task in itself and therefore may not ultimately represent a net gain in enthymeme reconstruction. As future work, Feng and Hirst propose attempting automatic type classification through rules such as defining one premise to be linked to another if either would become an enthymeme if deleted.
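The proposed rule, together with the definition of a convergent argument, can be sketched in Python as follows. The `suffices` judge is hypothetical. Deciding whether a set of premises is enough to establish a conclusion is exactly the hard, knowledge-heavy part that the rule leaves open.

```python
def argument_type(premises, suffices):
    """Label a premise set 'linked', 'convergent', or 'mixed/unknown'.

    suffices(subset) -> bool is a hypothetical judge of whether a frozenset
    of premises is enough to establish the conclusion.
    """
    all_premises = frozenset(premises)
    if not all_premises:
        return "mixed/unknown"
    # Linked: deleting any single premise leaves a gap (it would become an enthymeme).
    if len(all_premises) >= 2 and all(not suffices(all_premises - {p}) for p in all_premises):
        return "linked"
    # Convergent: any one premise on its own is enough.
    if all(suffices(frozenset({p})) for p in all_premises):
        return "convergent"
    return "mixed/unknown"


premises = ["All men are mortal", "Socrates is a man"]
print(argument_type(premises, lambda s: s == frozenset(premises)))  # linked
print(argument_type(premises, lambda s: len(s) >= 1))               # convergent
```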

While their efforts showed promising results in scheme classification, it is worth noting that best average accuracies varied significantly by scheme. Their classifier achieved remarkable results for an “argument from example” scheme (90.6%) and a “practical reasoning” scheme (90.8%). However, the schemes of “argument from consequences” and “argument from classification” were not nearly as successful – achieving only 62.9% and 63.2% best average accuracy respectively.

Feng and Hirst attribute this disparity to the low-performing schemes not having “such obvious cue phrases or patterns as the other three schemes which therefore may require more world knowledge encoded” (Feng and Hirst 2011). Thus, while the scheme classification approach cleverly merges the challenges introduced by Cohen’s coherent structure and evidence relationship theories, this work also shows that the challenges of linguistic cues should not be neglected.

new article: “Join a club! Or a team – both can make good citizens”

This new article explores reasons that K-12 athletics may boost civic engagement, as well as some important differences between sports and civic life. Student associations in general teach civic skills, and sports are best understood as examples of associations. Indeed, high school teams should be more like standard school clubs, in which participation is voluntary and the students are primarily responsible for managing the group.

Citation: Peter Levine, “Join a club! Or a team – both can make good citizens,” Phi Delta Kappan, vol. 97, no. 8 (May 2016), pp. 24–27

It’s Your Money. Where’s Your Say?

The article “It’s Your Money. Where’s Your Say?”, written by Larry Schooler, was published in February 2016 on the HuffPost Politics blog. Schooler discusses the contrast in how governments relate to the public: some are increasing transparency and opportunities for public engagement, while others are quick to restrict the public’s access to information and its control over the state’s budget. The article tips its hat to the use of Balancing Act [from Engaged Public] in San Antonio (TX), and to the steady increase in participatory budgeting processes around the US. Below is the article in full; you can find the original on the HuffPost blog here.

In our private lives, we have quite a bit of say over how we spend our money. Granted, an employer or client ultimately decides whether and what amount to pay us, but if we want to spend more on a house than on vacations, or more on our children’s education than on dining out, that’s our decision.

Seems strange, then, that when it comes to our tax money, our say often doesn’t amount to much. Often cities, counties, states, and the federal government take minimal input from the public on their budgets—ironic, given that a budget is widely viewed as the single most important policy a government approves. It’s almost like taking the decision about what house to buy away from the homebuyer.

I have been thinking about this for a few, somewhat contradictory reasons. I read this piece from Arizona, where the Governor decided to increase both transparency and input opportunities on his state’s budget, but the state House decided “not to allow public comment during departmental budget hearings to be held over the coming weeks.” Scheduled public hearings won’t help much because they’ll happen without any legislative budget to discuss.

On the other hand, more and more cities and state agencies are taking steps to increase public involvement in their budgets. San Antonio, Texas, used the user-friendly tool “Balancing Act” (in both English and Spanish) to help the public get their hands dirty figuring out how to manage city finances. Other cities, including Hartford, Connecticut, have taken similar steps with online budgeting tools. And in Austin, the “Budget in a Box” gives groups and individuals a chance to talk with one another about how to manage city finances.

In San Antonio, officials found that more than 1,200 unique users spent an average of more than ten minutes on the tool, suggesting they did more than offer knee-jerk reactions to the questions of what to fund and how much. But if this were just a game to make the public feel better, I would not have taken note. On the contrary: the city’s adopted budget for Fiscal Year 2016 (its largest ever) included a $23 million increase in street maintenance and a $10 million increase in sidewalk/pedestrian safety, all while reducing property taxes. Each of these reflected priorities citizens expressed in their responses.

Perhaps even more interesting is the explosion in the use of “participatory budgeting,” which got its start in Brazil but has spread to cities small and large across the U.S. This strategy gives the public the ultimate say over the way a portion of the budget gets spent, through a secret ballot vote that the sponsoring city or other agency commits to respect and implement.

So, why such a contrast? Why would some government officials want to keep the public out of budgeting and others bring the public closer in? As to the latter, an interesting study in, of all places, Russia found that participatory budgeting actually “increased local tax revenues collection, channeled larger fractions of public budgets to services stated as top priorities by citizens, and increased satisfaction levels with public services.” It stands to reason that if you ask the public what they want to buy, and how much they want spent on those services, they’ll be happier.

As to why public input into budgeting might not be such a good idea, perhaps elected officials fear that greater exposure of their budgetary decisions would shine a brighter light on questionable moves that favor top donors or influential special interest groups, rather than the public as a whole. Perhaps they think that increased engagement would take too much extra time or, ironically, cost a lot more money than the status quo. But that perspective overlooks the unintended consequences that can accompany budget choices made without the public: the ongoing Texas public school finance fight comes to mind. Neither time nor money was saved; if anything, it’s taken a lot longer and cost a lot more than lawmakers ever intended.

To be sure, changes in the way governments involve the public in budgeting would require a paradigm shift for just about everyone: elected officials, public administrators, and even the public themselves. But it could end up making it easier for lawmakers to stomach otherwise difficult choices around tax rates and funding levels. After all, if a lawmaker can show how a budget truly reflects what the constituents want, that’s worth a lot in political, and campaign, capital.

About Larry Schooler

Larry Schooler manages community engagement, public participation, and alternative dispute resolution projects for the City of Austin, Texas, where he conducts small and large group facilitation, strategic planning, collaborative problem solving, consensus building, and mediation sessions. Larry also works as a mediator, facilitator, and public engagement consultant for outside clients. He is a senior fellow at the Annette Strauss Institute for Civic Life at the University of Texas, a member of the faculty of Southern Methodist University, and Past President of the International Association for Public Participation (IAP2-USA). His work has been featured by a variety of organizations and publications, including Governing Magazine and the National League of Cities.

Resource Link: www.huffingtonpost.com/larry-schooler/its-your-money-wheres-you_b_9114234.html