Open government’s uncertain effects and the Biden opportunity: what now?

A review of 10 years of open government research reveals: 1) “a transparency-driven focus”, 2) “methodological concerns”, and 3) (perhaps unsurprisingly) “the lack of empirical evidence regarding the effects of open government”. My take is that these findings are, to some extent, self-reinforcing.

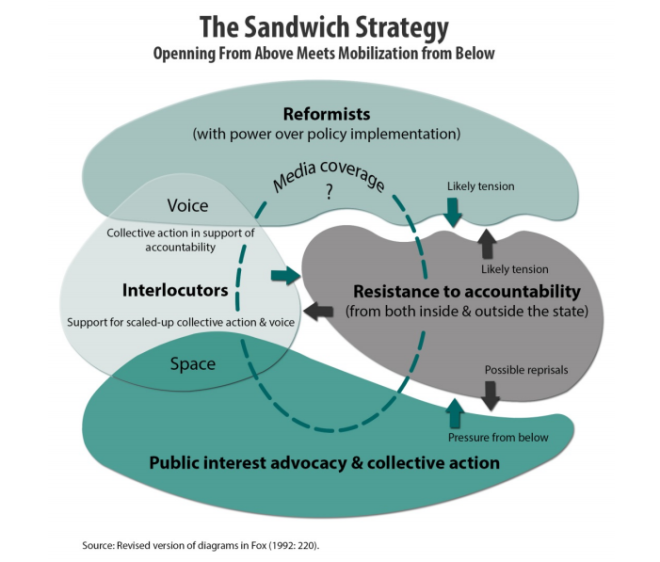

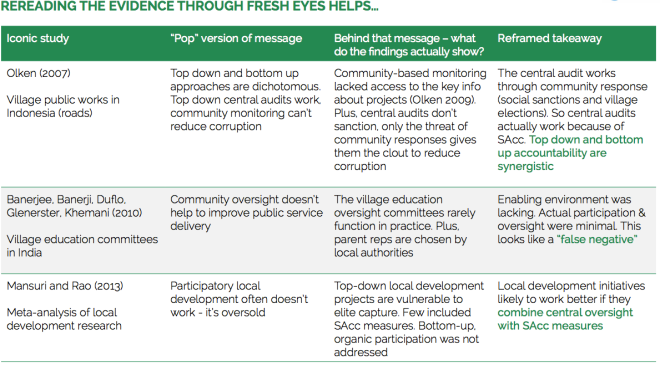

First, the early focus of open government advocates on transparency, while ignoring the conditions under which transparency can lead to public goods, is partly to blame. This is even more so when open government interventions rely on tactical rather than strategic approaches to accountability. Second, the fact that many of those engaged in open government efforts do not take the existing evidence into account helps neither in designing appropriate reforms nor in calibrating expectations. A case in point is the recurrent and mostly unsubstantiated spiel that “transparency leads to trust”, voiced by individuals and organizations who should have known better. Third, should open government reforms have any effects, these are hard to verify credibly, given that evaluations often suffer from methodological weaknesses, as the paper indicates.

Finally, open government’s semantic extravaganza makes building critical mass all the more difficult. For example, I doubt the paper would have reached similar conclusions had it expanded the review to practices that are, in substance, open government but are not normally labeled as such in the literature. This would be the case, for instance, of participatory budgeting (which has been shown to improve service delivery and increase tax revenues), or of strategic approaches to social accountability that present substantial results in terms of development outcomes.

In any case, the research findings remain troubling. The election of President Biden gives the open government agenda some extra oxygen, and that is great news. But in a context where autocratization is going viral, making a dent in how governments operate will take less policy-based evidence searching and more evidence-based strategizing. That means leveraging the existing evidence when it is available and, when it is not, following the standard path: more research is needed.

Open Government Partnership and Justice

On another note, Joe Foti, from the Open Government Partnership (OGP), writes on the need to engage more lawyers, judges and advocates in order to increase the number of accountability-focused OGP commitments. I particularly like Joe’s ideas on bringing these actors together to identify where OGP commitments could be stronger, and how. This resonates with a number of cases I’ve come across in the past where the judiciary played a key role in ensuring that citizens’ voice also had teeth.

I also share Joe’s enthusiasm for the potential of a new generation of commitments that put forward initiatives such as specialized anti-corruption courts and anti-SLAPP provisions. That said, the judiciary itself needs to be open, independent and capable. In most countries I’ve worked in, a good part of open government reforms fail precisely because of a dysfunctional judicial system.

Diversity, collective intelligence and deliberative democracy

Part of the justification for models of deliberative democracy lies in their epistemic quality: large and diverse crowds are smarter than the (elected or selected) few. Much of this argument finds its empirical basis in the fantastic work of Scott Page.

But that’s not all. We know, for instance, that gender diversity on corporate boards improves firms’ performance, ethnic diversity produces more impactful scientific research, diverse groups are better at solving crimes, popular juries are less biased than professional judges, and politically diverse editorial teams produce higher-quality Wikipedia articles. Diversity also helps to explain classical Athens’ striking superiority vis-à-vis other city-states of its time, owing to its democratic system’s capacity to leverage the dispersed knowledge of its citizens through sortition.

Now, a Nature article, “Algorithmic and human prediction of success in human collaboration from visual features”, presents new evidence of the power of diversity in problem-solving tasks. In the paper, the authors examine patterns of group success in Escape The Room, an adventure game in which a group attempts to escape a maze by collectively solving a series of puzzles. They find that groups that are larger, older and more gender diverse are significantly more likely to escape. But there’s an exception: more age-diverse groups are less likely to escape. Intriguing, isn’t it?

Deliberative processes online: rough review of the evidence

As the pandemic pushes more deliberative exercises online, researchers and practitioners are starting to take more seriously the question of how effective online deliberation can be compared to in-person processes. Surprisingly, very few empirical studies compare the two.

But a quick run through the literature offers some interesting insights. For instance, a 2004 online deliberative poll on U.S. foreign policy and a traditional face-to-face deliberative poll conducted in parallel produced remarkably similar results. A 2007 experiment comparing online and face-to-face deliberation found that both approaches can increase participants’ issue knowledge, political efficacy, and willingness to participate in politics. A similar comparison from 2009, looking at deliberation over the construction of a power plant in Finland, found considerable resemblance between the outcomes of the online and face-to-face processes. A study published in 2012 on waste treatment in France found that, compared to the offline process, online deliberation was more likely to: i) increase women’s interventions, ii) promote the justification of arguments, and iii) be oriented towards the common good (although in this case the two processes were not similar in design).

The external validity of these findings, however encouraging, remains an empirical question, particularly since the technology used to support deliberation has in many cases changed since these studies were conducted (e.g. from written to “zoomified” deliberations). Anyhow, kudos should go to the researchers who started engaging with the subject well over a decade ago: if their work was niche then, its importance now is blatantly obvious.

(BTW, on a related issue, here’s a fascinating 2021 experiment examining whether online juries can make consistent, repeatable decisions. Interestingly, deliberating groups are much more consistent than non-deliberating groups.)

Fixing the Internet?

Anne Applebaum and Peter Pomerantsev published a great article in The Atlantic on the challenges posed to democracy by an Internet model that fuels disinformation and polarization, and on alternative paths to address them. I was thankful for the opportunity to make a modest contribution to such a nice piece.

At the same time, an excellent Twitter thread by Levi Boxell is a good reminder that we may sometimes be overestimating the effects of the Internet on polarization. Levi highlights three stylized facts regarding mass polarization: i) it has been increasing in the US since at least the 1980s, ii) it has been increasing more quickly among older age groups in the US, and iii) over the past 30 years, countries have shown different patterns of polarization despite similar Internet usage.

Of course, that doesn’t mean we shouldn’t be concerned about the effects of the Internet on politics. For instance, a new study in the American Political Science Review finds that radical right parties benefit more than any other parties from malicious bots on social media.

Open democracy

2021 continues to be a good year for proponents of deliberative democracy, with growing coverage of the subject in the mainstream media, fueled in part by the recent launch of Hélène Landemore’s great book “Open Democracy.” Looking for something to listen to? Look no further than Ezra Klein’s interview with Hélène.

A dialogue among giants

The recording of the roundtable Contours of Participatory Democracy in the 21st Century is now available. The conversation between Jane Mansbridge, Mark Warren and Cristina Lafont can be found here.

Democracy and design thinking

Speaking of giants, Michael Saward’s new book, “Democratic Design”, is finally out. I’m a big fan of Michael’s work, so my recommendation may be biased. In this new book, Michael brings design thinking together with democratic theory and practice. If the design of democratic institutions is one of your topics, you should definitely check it out!

Civic Tech

I was thrilled to have the opportunity to deliver a lecture at the Center for Collective Learning – Artificial and Natural Intelligence Institute. My presentation, Civic Technologies: Past, Present and Future, can be found here.

Scholarly articles:

And finally, for those who really want to geek-out, a list of 15 academic articles I enjoyed reading:

Foster, D., & Warren, J. (2021). The politics of spatial policies. Available at SSRN 3768213.

Hanretty, C. (2021). The Pork Barrel Politics of the Towns Fund. The Political Quarterly.

Eubank, N., & Fresh, A. Enfranchisement and Incarceration After the 1965 Voting Rights Act.

Campbell, T. (2021). Black Lives Matter’s Effect on Police Lethal Use-of-Force. Available at SSRN.

Miscellaneous radar:

- Modern Grantmaking: That’s the title of a new book by Gemma Bull and Tom Steinberg. I had the privilege of reading snippets of it, and I can already recommend it not only to those working in grantmaking, but also to pretty much anyone working in the international development space.

- Lectures: The Center for Collective Learning has a fantastic line-up of lectures open to the public. Find out more here.

- Learning from Togo: While unemployment benefits websites were crashing in the US, the Togolese government showed how to leverage mobile money and satellite data to effectively get cash into the hands of those who need it the most.

- Nudging the nudgers: British MPs are criticising academics for sending them fictitious emails for research. I wonder whether part of their outrage is not just about the emails, but about what the study could reveal about their actual responsiveness to different constituencies.

- DataViz: Bringing data visualization to physical/offline spaces has been an obsession of mine for quite a while. I was happy to come across this project while doing some research for a presentation.

Enjoy the holiday.